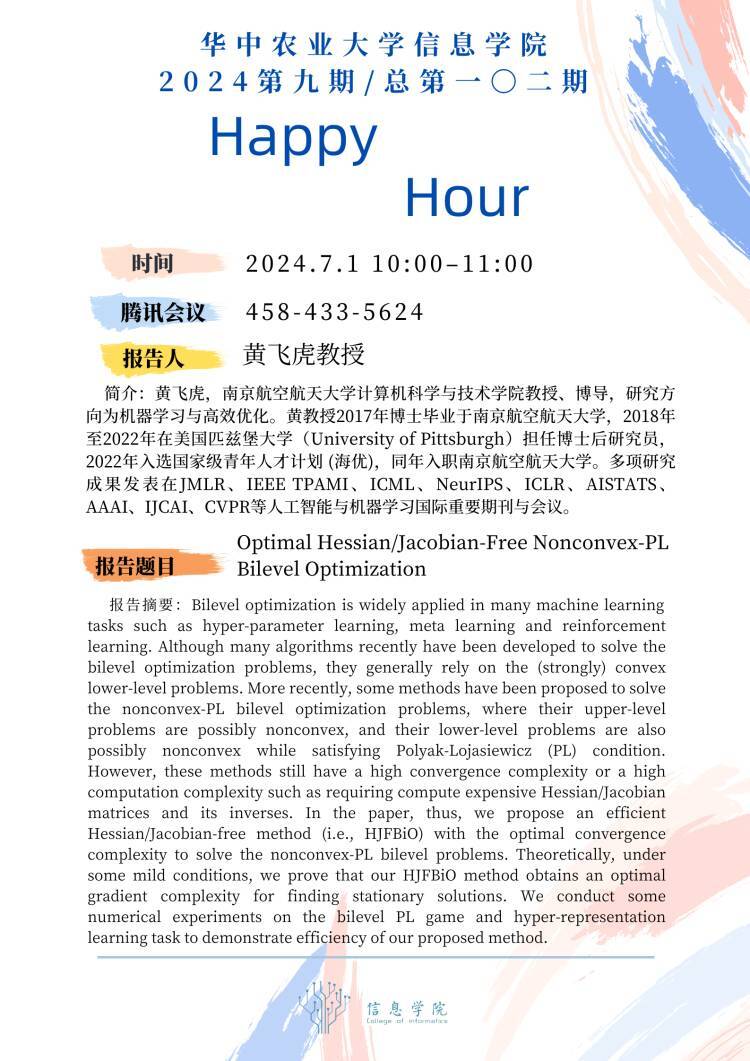

School of Information Happy Hour, 2024 Session 9 (Session 102 overall)

Time: July 1, 10:00-11:00 AM

Tencent Meeting: 458-433-5624

Talk title:

Optimal Hessian/Jacobian-Free Nonconvex-PL Bilevel Optimization

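Speaker: Prof. Feihu Huang (黄飞虎)

Feihu Huang is a professor and doctoral supervisor at the College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics (NUAA). His research focuses on machine learning and efficient optimization. He received his Ph.D. from NUAA in 2017 and worked as a postdoctoral researcher at the University of Pittsburgh, USA, from 2018 to 2022. In 2022 he was selected for a national-level young talent program (Overseas Excellent Young Scholars) and joined NUAA the same year. His research has been published in leading international journals and conferences in artificial intelligence and machine learning, including JMLR, IEEE TPAMI, ICML, NeurIPS, ICLR, AISTATS, AAAI, IJCAI, and CVPR.

Abstract: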

Bilevel optimization is widely applied in many machine learning tasks such as hyper-parameter learning, meta learning, and reinforcement learning. Although many algorithms have recently been developed to solve bilevel optimization problems, they generally rely on (strongly) convex lower-level problems. More recently, some methods have been proposed to solve nonconvex-PL bilevel optimization problems, where the upper-level problem is possibly nonconvex and the lower-level problem is also possibly nonconvex while satisfying the Polyak-Lojasiewicz (PL) condition. However, these methods still have a high convergence complexity or a high computation complexity, such as requiring the computation of expensive Hessian/Jacobian matrices and their inverses. In this paper, we therefore propose an efficient Hessian/Jacobian-free method (i.e., HJFBiO) with the optimal convergence complexity to solve nonconvex-PL bilevel problems. Theoretically, under some mild conditions, we prove that our HJFBiO method obtains an optimal gradient complexity for finding stationary solutions. We conduct numerical experiments on a bilevel PL game and a hyper-representation learning task to demonstrate the efficiency of our proposed method.
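
For readers new to the topic, the problem class named in the abstract is usually written in the standard form below. This is only a sketch: the symbols f, g, x, y, the dimensions d and p, and the constant μ > 0 are notation assumed here for illustration, and the talk may use a different but equivalent formulation. The first part is the bilevel problem, with a possibly nonconvex upper-level objective f and lower-level objective g. The second part is the PL condition imposed on g with respect to y.

```latex
\begin{aligned}
\min_{x \in \mathbb{R}^{d}} \quad & F(x) = f\bigl(x, y^{*}(x)\bigr) \\
\text{s.t.} \quad & y^{*}(x) \in \arg\min_{y \in \mathbb{R}^{p}} g(x, y),
\end{aligned}
\qquad
\bigl\| \nabla_{y} g(x, y) \bigr\|^{2} \;\ge\; 2\mu \Bigl( g(x, y) - \min_{y'} g(x, y') \Bigr)
\quad \text{for all } y .
```

The PL condition does not require g to be convex in y, and the lower-level minimizer need not be unique, which is why the constraint is written with set membership rather than equality.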